Measuring Bits and Gravitational Waves

How do you measure gravitational waves? How does that compare to counting bits in networking?

In June 2017, IWL staff attended a fascinating lecture by Dr. Jess McIver of Caltech titled "Einstein Gravitational Waves and Black Holes". Dr. McIver described the operation of the LIGO project for detecting gravitational waves.

So when an article appeared on the history of the early efforts to detect gravitational waves, ultimately resulting in LIGO, we eagerly read it:

The Experiment

In 1969, Dr. Joseph Weber, a physicist at the University of Maryland, published a paper on his method for detecting gravitational waves. His mechanism involved suspending two six-ton aluminum bars in a vacuum, at sites in Maryland and Illinois. If the bars detected a gravitational wave, they would resonate two octaves above middle C. Weber declared success. However, when others tried to duplicate the experiment, they could not.

Interferometers and Lasers

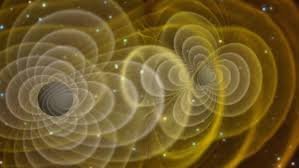

Probably inspired by Weber’s experiment, other physicists tried other mechanisms, including interferometers and lasers. The approach using lasers resulted in the LIGO project. To date there is general agreement that the LIGO mechanism works and gravitational waves have been detected and reported.

According to the article, Dr. Weber’s setup was insufficiently sensitive for the task.

Issues with Measurement in Science and Engineering

The Wikipedia entry for Accuracy and Precision provides a great summation of the issue:

In the fields of science and engineering, the accuracy of a measurement system is the degree of closeness of measurements of a quantity to that quantity's true value. The precision of a measurement system, related to reproducibility and repeatability, is the degree to which repeated measurements under unchanged conditions show the same results. Although the two words precision and accuracy can be synonymous in colloquial use, they are deliberately contrasted in the context of the scientific method.

Based on the Wikipedia definition, Weber’s issue was one of precision: as noted above, his findings were not reproducible or repeatable.
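To make the distinction concrete, here is a minimal Python sketch. It assumes the true value is known, and it uses the mean offset from that value as a stand-in for accuracy and the sample standard deviation as a stand-in for precision. The function names and the sample readings are hypothetical and chosen only for illustration; they are not data from any experiment.

```python
from statistics import mean, stdev

def accuracy_error(measurements, true_value):
    """Mean offset from the true value; closer to zero means more accurate."""
    return mean(measurements) - true_value

def precision_spread(measurements):
    """Sample standard deviation; smaller means more precise (repeatable)."""
    return stdev(measurements)

# Hypothetical readings of a quantity whose true value is assumed to be 100.0.
TRUE_VALUE = 100.0
tight_but_biased = [104.9, 105.0, 105.1, 105.0]      # precise, not accurate
scattered_but_centered = [90.0, 110.0, 95.0, 105.0]  # accurate on average, not precise

for name, readings in [("tight_but_biased", tight_but_biased),
                       ("scattered_but_centered", scattered_but_centered)]:
    print(f"{name}: offset={accuracy_error(readings, TRUE_VALUE):+.2f}, "
          f"spread={precision_spread(readings):.2f}")
```

The first set of readings agrees closely with itself but sits about five units from the true value. The second set averages out to the true value but varies widely from one reading to the next.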

In our Counting Bits blog post, we examine tools to obtain network performance measurements. Often one cannot tell which bits are counted (and which are NOT counted) by the tool. We cannot compare measurements when we are not sure what was measured. Here the issue is one of accuracy. How does the quantity measured (the number of bits transmitted and received) compare to the quantity’s true value?

Taking this a step further, only when the computer networking industry has a clear definition of what is measured can we address the accuracy of the measurements.
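As a rough illustration of why the definition matters, the following Python sketch counts the bits for a single full-size TCP segment in four different ways, depending on which layers are included. It assumes TCP over IPv4 over standard Ethernet with no IP or TCP options and no VLAN tag, and it ignores padding of very small frames. The function name and labels are hypothetical; which of these counts any particular tool reports is exactly the open question.

```python
# Per-frame overhead in bytes (assumes IPv4 and TCP headers without options,
# untagged Ethernet, and a payload large enough that no padding is needed).
PREAMBLE_AND_SFD = 8
ETHERNET_HEADER = 14
ETHERNET_FCS = 4
INTERFRAME_GAP = 12
IPV4_HEADER = 20
TCP_HEADER = 20

def bits_by_definition(payload_bytes):
    """Return the bit count for one segment under four counting definitions."""
    ip_packet = payload_bytes + TCP_HEADER + IPV4_HEADER
    ethernet_frame = ip_packet + ETHERNET_HEADER + ETHERNET_FCS
    on_the_wire = ethernet_frame + PREAMBLE_AND_SFD + INTERFRAME_GAP
    return {
        "application payload": payload_bytes * 8,
        "IP packet": ip_packet * 8,
        "Ethernet frame": ethernet_frame * 8,
        "on the wire": on_the_wire * 8,
    }

counts = bits_by_definition(1460)  # payload of a full-size segment, 1500-byte MTU
for definition, bits in counts.items():
    print(f"{definition:>20}: {bits} bits")
```

Under these assumptions, the same transfer differs by roughly five percent between the smallest and largest counts, so two tools can disagree without either one being wrong by its own definition.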

Isn’t it time to agree on definitions?

Our thanks to Joel Shurkin for introducing us to this story.

Photo Credit: ligo.caltech.edu